Written by Viren Dias – Lead Data Scientist at Calcey

In recent months, ChatGPT, with its uncanny ability to understand human language and mimic human responses, has created quite a buzz around the Calcey office, and it has got us thinking about how we could incorporate it and its underlying technology into our products. We thought the best way to figure that out would be to trial it in an internal project, and for that, we needed an appropriate use case.

Our colleagues over at Human Resources (HR) had been spending their valuable time meticulously documenting HR policies in an internal wiki, only for us slackers to be too lazy to read it and to waste even more of their time by asking them directly! This sparked an idea: why not create a chatbot that could leverage the information contained within the HR wiki to answer questions related to HR policies?

What is ChatGPT?

ChatGPT is a large language model (LLM) trained to understand and respond to natural language conversations. As its name suggests, it is built on top of a Generative Pre-trained Transformer (GPT), more specifically on GPT-4, the latest iteration at the time of writing.

GPT is a family of LLMs trained on curated portions of the internet, and refined with human feedback, to predict the next token in a given document. In simpler terms, given a prompt, the model attempts to predict what the response should be, drawing on what it learned from that training data. In the case of ChatGPT, the prompt is simply an incomplete chat log, and the model attempts to predict what the next message should be.
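To make “predict the next token” concrete, here is a deliberately tiny Python sketch. It is not how GPT works internally (GPT uses a transformer over subword tokens with learned probabilities); it simply counts which word follows which in a toy corpus and greedily appends the most frequent successor, one token at a time. The function names and the corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for every word, how often each other word follows it."""
    words = corpus.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def complete(prompt, follows, max_new_tokens=5):
    """Greedy autoregressive generation: repeatedly append the most
    frequent successor of the last word until none is known."""
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        if not tokens:
            break
        candidates = follows.get(tokens[-1])
        if not candidates:
            break  # no word ever followed this one in the corpus
        tokens.append(candidates.most_common(1)[0][0])
    return " ".join(tokens)


follows = train_bigram("the cat sat on the mat the cat sat down")
print(complete("the", follows, max_new_tokens=2))  # the cat sat
```

Each generated word is fed back in as part of the prompt for the next step; that loop, rather than the counting, is the part that carries over to models like GPT.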

How we adapted ChatGPT to our task

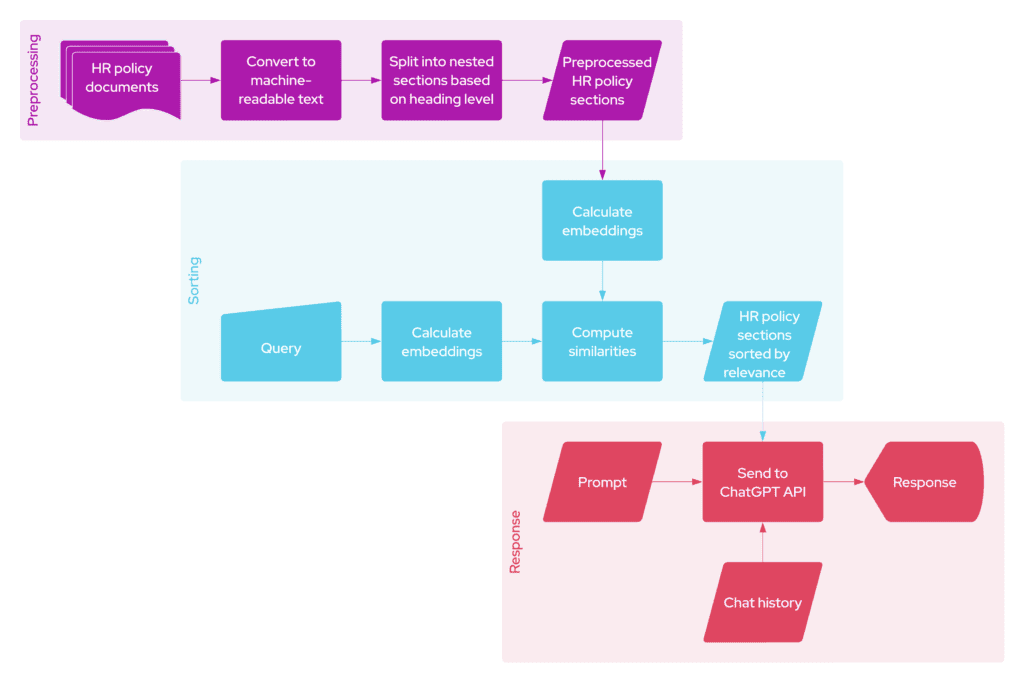

Figure 1: A flowchart of the processes involved in producing a response to a user-supplied query regarding HR policies.

At a high level, the processes involved in adapting ChatGPT to respond to questions related to HR policies can be grouped into three distinct stages:

- A preprocessing stage, where we convert the HR policy documents into a machine-readable format and break them down into coherent sections.

- A sorting stage, where we sort the aforementioned sections by their relevance to the query.

- A response stage, where we supply the ChatGPT API with the necessary information to evoke an appropriate response.

We briefly discuss each of these stages and their associated engineering challenges below.

Preprocessing the HR policies

The HR policy documents are available in the form of wiki pages, which ChatGPT does not handle well in their raw form. Consequently, we needed to convert them into a ChatGPT-friendly format, which we arrived at iteratively through experimentation. This involved tasks such as:

- Stripping HTML tags,

- Running optical character recognition (OCR) software on images and infographics,

- Converting HTML tables into Markdown tables,

- Printing hyperlink URLs explicitly alongside their text,

- Etc.
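As a rough illustration of these conversions, the following Python sketch uses only the standard library's `html.parser` to strip tags, print link URLs next to their text, rebuild tables in Markdown, and keep headings as `#` lines (handy for sectioning later). It is not our actual pipeline: the class and function names are hypothetical, nested tables and malformed HTML are not handled, and OCR on images is left out because it would need an external engine such as Tesseract.

```python
from html.parser import HTMLParser

HEADINGS = {"h1": 1, "h2": 2, "h3": 3, "h4": 4, "h5": 5, "h6": 6}


def to_markdown_table(rows):
    """Render rows (lists of cell strings) as a Markdown table; row 0 is the header."""
    if not rows:
        return ""
    width = max(len(row) for row in rows)
    rows = [row + [""] * (width - len(row)) for row in rows]
    lines = ["| " + " | ".join(rows[0]) + " |", "|" + "---|" * width]
    lines += ["| " + " | ".join(row) + " |" for row in rows[1:]]
    return "\n".join(lines)


class WikiPageToMarkdown(HTMLParser):
    """Hypothetical converter: drops tags, keeps headings as '#' lines,
    prints link URLs next to their text, and rebuilds tables in Markdown."""

    def __init__(self):
        super().__init__()  # convert_charrefs=True decodes &amp; etc.
        self.out = []       # text chunks outside tables
        self.href = None    # URL of the <a> tag currently open
        self.table = None   # rows collected while inside <table>
        self.row = None     # cells of the current <tr>
        self.cell = None    # text chunks of the current <td>/<th>

    def emit(self, text):
        if self.cell is not None:
            self.cell.append(text)
        elif self.table is None:
            self.out.append(text)
        # whitespace between table tags (outside any cell) is dropped

    def handle_starttag(self, tag, attrs):
        if tag in HEADINGS:
            self.emit("\n" + "#" * HEADINGS[tag] + " ")
        elif tag in ("p", "div", "br"):
            self.emit("\n")
        elif tag == "li":
            self.emit("\n- ")
        elif tag == "a":
            self.href = dict(attrs).get("href")
        elif tag == "table":
            self.table = []
        elif tag == "tr" and self.table is not None:
            self.row = []
        elif tag in ("td", "th") and self.row is not None:
            self.cell = []

    def handle_endtag(self, tag):
        if tag in HEADINGS:
            self.emit("\n")
        elif tag == "a":
            if self.href:
                self.emit(f" ({self.href})")  # print the URL explicitly
            self.href = None
        elif tag in ("td", "th") and self.cell is not None:
            text = " ".join("".join(self.cell).split())
            self.row.append(text.replace("|", "\\|"))  # keep table syntax intact
            self.cell = None
        elif tag == "tr" and self.row is not None:
            self.table.append(self.row)
            self.row = None
        elif tag == "table" and self.table is not None:
            self.out.append("\n\n" + to_markdown_table(self.table) + "\n\n")
            self.table = None

    def handle_data(self, data):
        self.emit(data)


def wiki_html_to_markdown(html):
    parser = WikiPageToMarkdown()
    parser.feed(html)
    parser.close()
    cleaned, prev_blank = [], False
    for line in "".join(parser.out).splitlines():
        line = line.strip()
        if line or not prev_blank:  # collapse runs of blank lines
            cleaned.append(line)
        prev_blank = not line
    return "\n".join(cleaned).strip()
```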

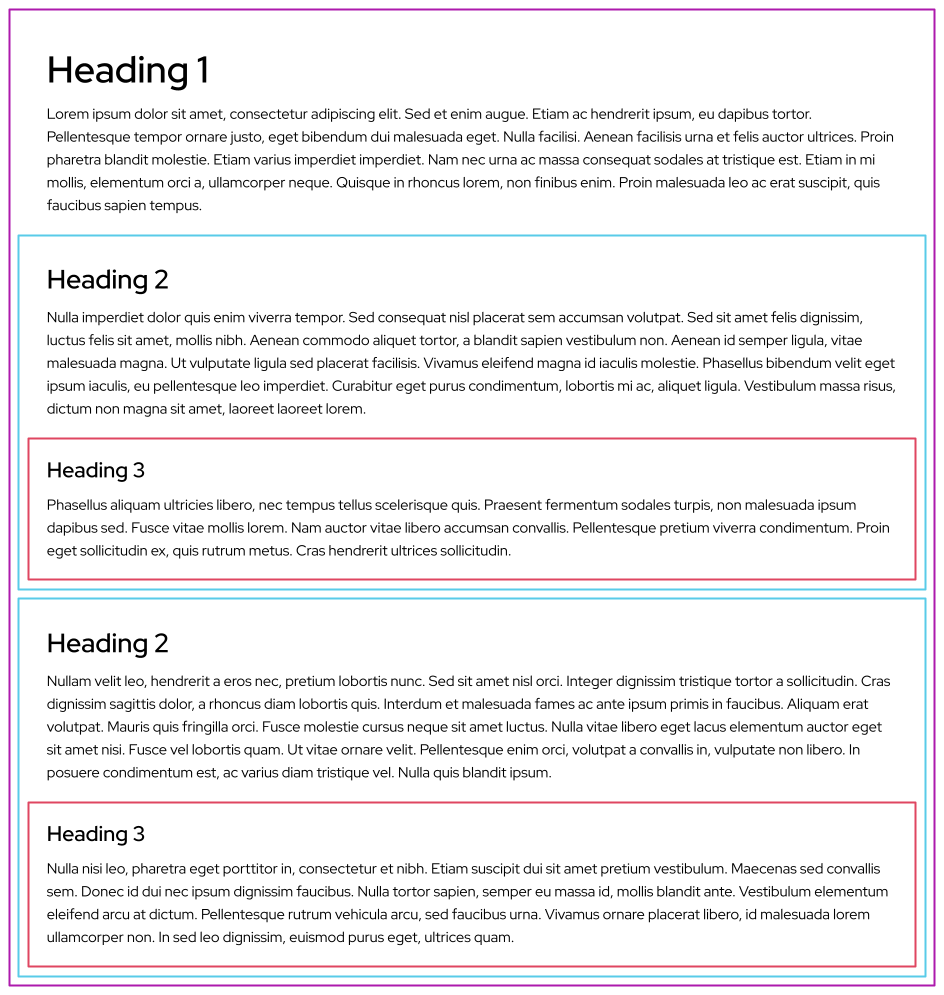

Figure 2: A visual depiction of the sectioning methodology. Each rectangle represents a separate section.

Additionally, some documents can get quite large and touch on several different HR policies, so we needed to break them down into smaller, more coherent sections. We found that the best way to do this was to use heading levels to split each document into nested sections, with each section containing all the sections hierarchically below it.
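A minimal sketch of this nested sectioning, assuming the pages have already been converted to Markdown with `#`-style headings (the `Section` type and `nested_sections` function are hypothetical names): each heading opens a section that runs until the next heading of the same or a higher level, so a parent section automatically contains all of its subsections.

```python
import re
from dataclasses import dataclass
from typing import List

HEADING = re.compile(r"^(#{1,6})\s+(.*)$")


@dataclass
class Section:
    title: str
    level: int  # 1 for "#", 2 for "##", ...
    text: str   # heading line plus everything nested under it


def nested_sections(markdown: str) -> List[Section]:
    """Split a Markdown page into overlapping, nested sections.

    Text before the first heading is not assigned to any section here.
    """
    lines = markdown.splitlines()
    headings = []
    for i, line in enumerate(lines):
        match = HEADING.match(line)
        if match:
            headings.append((i, len(match.group(1)), match.group(2).strip()))

    sections = []
    for k, (start, level, title) in enumerate(headings):
        end = len(lines)
        # The section ends at the next heading of the same or higher rank.
        for next_start, next_level, _ in headings[k + 1:]:
            if next_level <= level:
                end = next_start
                break
        sections.append(Section(title, level, "\n".join(lines[start:end]).strip()))
    return sections
```

Because parents include their children, a broad question can match a whole policy page while a narrow one can match a single subsection.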

Sorting HR Policies by Relevance

In the interest of computational efficiency and accuracy of responses, we needed to sort the HR policy sections by their relevance to the query. To do this, we made use of text embeddings: numerical (vector) representations of text. A simple example would be a vector that tallies the occurrences of each word in a text. The embeddings of two texts can then be passed to a similarity function, such as cosine similarity, to measure mathematically how similar the texts are.
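The word-tallying example above can be written in a few lines of Python, paired with cosine similarity as the similarity function. This is a toy bag-of-words embedding for illustration only; the article does not say which embedding model was used, and learned embeddings usually capture meaning much better than raw word counts.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Toy embedding: a sparse vector of lower-cased word counts."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine_similarity(a, b):
    """Cosine of the angle between two count vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


query = bag_of_words("How many days of annual leave do I get?")
leave = bag_of_words("Annual leave: employees get 14 days of annual leave.")
dorm = bag_of_words("Dormitory rules and visiting hours.")
print(cosine_similarity(query, leave) > cosine_similarity(query, dorm))  # True
```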

Once we had calculated the embeddings of the query and all the HR policy sections, we computed the similarity between the query and each section. We then sorted the sections by this similarity to yield a list of HR policy sections ordered by their relevance to the query.
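Once every text has an embedding, ranking is just a sort. The sketch below assumes the embeddings are already plain lists of floats (for example, as returned by whatever embedding model is in use); `rank_sections` is a hypothetical helper, and cosine similarity is an assumed choice of similarity function.

```python
import math


def cosine(u, v):
    """Cosine similarity between two dense vectors of equal length."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def rank_sections(query_vec, section_vecs):
    """Return (section title, score) pairs, most relevant first."""
    scored = [(title, cosine(query_vec, vec)) for title, vec in section_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored


# Made-up 2-D embeddings purely for illustration.
ranking = rank_sections(
    [1.0, 0.0],
    {"Leave": [0.9, 0.1], "Dorm": [0.1, 0.9], "Referrals": [0.5, 0.5]},
)
print([title for title, _ in ranking])  # ['Leave', 'Referrals', 'Dorm']
```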

Evoking an appropriate response

To elicit a desired response from ChatGPT, we need to supply it with three pieces of information:

- The relevant HR policy sections to inform ChatGPT of the HR policies,

- The user-supplied query, along with the accompanying chat history to provide context for it, and

- A well-engineered prompt to instruct ChatGPT on how to respond using the above data.
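One way to package these three pieces is as a list of chat messages in the `role`/`content` format accepted by the `messages` parameter of OpenAI's Chat Completions API. The sketch below only builds that list and does not call the API. Placing the instructions and wiki sections in a single system message, and capping the context with a character budget as a crude stand-in for a token limit, are assumptions for illustration rather than details of the chatbot described here; `build_messages` is a hypothetical name.

```python
def build_messages(instructions, ranked_sections, history, query,
                   max_context_chars=6000):
    """Assemble a Chat Completions-style message list.

    ranked_sections: section texts, most relevant first.
    history: earlier turns as {"role": ..., "content": ...} dicts.
    """
    # Keep the most relevant sections that fit in a rough character budget
    # (characters are only a crude proxy for tokens).
    context, used = [], 0
    for section in ranked_sections:
        if used + len(section) > max_context_chars:
            break
        context.append(section)
        used += len(section)

    system = instructions + "\n\nWiki:\n" + "\n\n---\n\n".join(context)
    return (
        [{"role": "system", "content": system}]
        + list(history)
        + [{"role": "user", "content": query}]
    )


messages = build_messages(
    instructions="You are a helpful assistant working at Calcey.",
    ranked_sections=["Dormitory policy text", "Leave policy text"],
    history=[],
    query="How long can I stay in the dorm for?",
)
```

The `instructions` argument is where the prompt discussed below goes.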

The relevant sections come from the sorting stage, and the query and chat history come from the user, so what remains is a good prompt. Engineering one is an iterative process that requires a lot of experimentation. It is not always as simple as providing instructions: ChatGPT has historically suffered from hallucination, whereby the model confidently gives an incorrect answer to a question it does not know the answer to. However, with the right prompt, this issue can be greatly mitigated.

The prompt we settled on was as follows:

“You are a helpful assistant working at Calcey. Answer the questions truthfully and explicitly, providing additional details when available. Use only the information contained within the wiki, and if the answer is not contained in the wiki, ask the user to contact HR.”

Q: What is the reward for introducing a senior software engineer?

A: The reward for introducing a Senior Software Engineer is an iPhone 13 128GB. Please note that certain conditions apply, such as the candidate must not have applied to Calcey before the referral email, and the referee will not join the interview process. The reward will be ready within one month from the date your friend starts working with Calcey. To refer someone, send their resumes to internal-referrals@calcey.com.

(a) An example of a response containing additional relevant details to the query.

Q: How long can I stay in the dorm for?

A: The Calcey Dormitory Facility Policy does not explicitly mention a maximum duration for an employee's stay. If you have specific questions about the duration of your stay, it's best to contact HR for further clarification.

(b) An example of how it responds to a query it should not know the answer to.

Figure 3: Examples of how the prompt influences ChatGPT’s response.

Breaking down the prompt and explaining what each sentence does:

- “You are a helpful assistant working at Calcey”. This encourages the model to respond politely and from the perspective of a colleague.

- “Answer the questions truthfully and explicitly, providing additional details when available”. Instructing the model to provide additional details results in the response including links to forms it refers to, contact details for people it refers to, etc. However, this increases the risk of the aforementioned hallucination issue.

- “Use only the information contained within the wiki, and if the answer is not contained in the wiki, ask the user to contact HR”. This greatly curbs the hallucination issue and provides explicit instructions on how to respond to questions the model does not know the answer to.

Closing Thoughts

ChatGPT is a very powerful tool that can be molded to suit a variety of use cases. However, since its inner workings are not precisely understood and its responses are stochastic in nature, it can be tricky to instruct it to do exactly what you want it to. As a result, it can require quite a bit of experimentation to get the desired outcome.